AdaBoost, Gradient Boosting and XGBoost are three closely related boosting algorithms. In XGBoost, Chen and Guestrin add shrinkage (i.e. a learning rate) to gradient tree boosting.


Gradient boosting is similar to Adaptive Boosting (AdaBoost) but differs from it in certain aspects.

I learned that XGBoost uses Newton's method to optimize the loss function, but I don't understand what happens when the Hessian is not positive definite. In XGBoost, decision trees are created sequentially, and the algorithm is an improved version of the gradient boosting algorithm.
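To make the Hessian question concrete, here is a minimal sketch of our own (not XGBoost's source code). It computes the per-example gradient and Hessian of two common losses with respect to the raw prediction. It also computes the regularized Newton step -G/(H + λ), which the XGBoost paper gives as the optimal weight of a leaf. For squared error the Hessian is the constant 1. For logistic loss it is p(1 - p), which is never negative but approaches 0 for very confident predictions. An L2 penalty λ > 0 (XGBoost's `lambda` parameter) keeps the denominator positive in that case. The function names are hypothetical.

```python
import math

def squared_error_grad_hess(y: float, pred: float) -> tuple[float, float]:
    # Loss 0.5 * (pred - y)^2: the gradient is pred - y and the Hessian is always 1.
    return pred - y, 1.0

def logistic_grad_hess(y: float, margin: float) -> tuple[float, float]:
    # Log loss for a label y in {0, 1}, differentiated w.r.t. the raw margin (log-odds).
    p = 1.0 / (1.0 + math.exp(-margin))
    return p - y, p * (1.0 - p)  # the Hessian p * (1 - p) lies in [0, 0.25]

def newton_leaf_weight(grads, hessians, reg_lambda: float = 1.0) -> float:
    # Regularized Newton step for one leaf: w* = -G / (H + lambda).
    G, H = sum(grads), sum(hessians)
    return -G / (H + reg_lambda)

# A very confident, correct prediction has a Hessian close to 0 ...
g, h = logistic_grad_hess(1.0, 20.0)
print(g, h)
# ... but with lambda > 0 the Newton step still has a positive denominator.
print(newton_leaf_weight([g], [h], reg_lambda=1.0))
```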

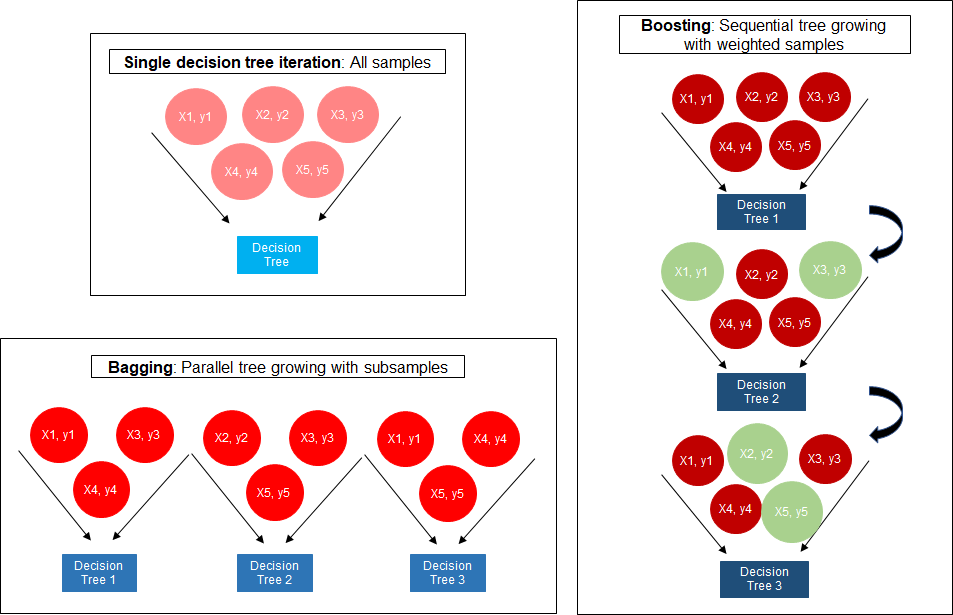

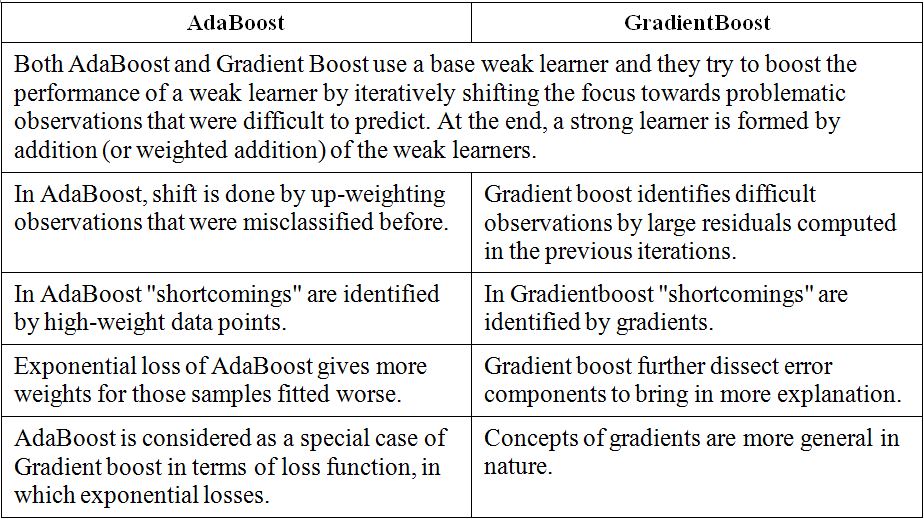

Difference between gradient boosting and AdaBoost: AdaBoost and gradient boosting are ensemble techniques used in machine learning to improve the performance of weak learners. Neural networks and genetic algorithms are our naive attempts to imitate nature.
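AdaBoost improves its weak learners by reweighting the training examples, whereas gradient boosting fits residuals. Below is a minimal NumPy sketch of discrete AdaBoost with decision stumps, assuming labels in {-1, +1}. Each round picks the stump with the lowest weighted error ε and gives it a vote α = ½ ln((1 − ε)/ε). It then multiplies the weight of every misclassified example by e^α, and of every correct one by e^−α, before renormalizing. The helper names are hypothetical, and the toy data is made up for illustration.

```python
import numpy as np

def stump_predict(X, j, t, polarity):
    # A one-split tree: predict `polarity` if feature j <= t, otherwise -polarity.
    return np.where(X[:, j] <= t, polarity, -polarity)

def fit_weighted_stump(X, y, w):
    # Try every (feature, threshold, polarity) and keep the lowest weighted error.
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                err = w[stump_predict(X, j, t, polarity) != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best

def adaboost(X, y, n_rounds=20):
    # Discrete AdaBoost; y must contain labels -1 and +1.
    w = np.full(len(y), 1.0 / len(y))  # start with uniform sample weights
    model = []
    for _ in range(n_rounds):
        err, j, t, polarity = fit_weighted_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0) and division by 0
        alpha = 0.5 * np.log((1 - err) / err)  # this stump's vote
        pred = stump_predict(X, j, t, polarity)
        w = w * np.exp(-alpha * y * pred)      # misclassified (y * pred = -1) get heavier
        w = w / w.sum()
        model.append((alpha, j, t, polarity))
    return model

def predict(model, X):
    score = sum(alpha * stump_predict(X, j, t, pol) for alpha, j, t, pol in model)
    return np.sign(score)

# Toy data: positives inside an interval, which no single stump can separate.
X = (np.arange(20) / 20).reshape(-1, 1)
y = np.where((X[:, 0] > 0.3) & (X[:, 0] < 0.7), 1.0, -1.0)
model = adaboost(X, y, n_rounds=3)
print("training accuracy:", (predict(model, X) == y).mean())
```

No single stump can classify this interval, yet a weighted vote of a few stumps can, because each round concentrates weight on the examples the previous stumps got wrong.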

Plain gradient boosting minimizes the loss without an explicit penalty on model complexity, whereas XGBoost also includes a regularization term, which helps it manage the bias-variance trade-off. XGBoost can be used to train a standalone random forest, or a random forest can serve as the base model for gradient boosting in XGBoost. The idea behind boosting is to train predictors sequentially, where each subsequent model tries to fix the errors of its predecessor.
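The sequential idea is easiest to see with squared-error loss, where "the errors of the predecessor" are simply the residuals y − F(x). Here is a minimal NumPy sketch, not any library's implementation. Each round fits a one-split regression tree (a stump) to the current residuals and adds it to the ensemble, scaled by a learning rate. The names `fit_stump` and `gradient_boost` are our own.

```python
import numpy as np

def fit_stump(x, r):
    # Least-squares regression stump: one threshold, a constant on each side.
    # Assumes x has at least two distinct values.
    best = None
    for t in np.unique(x)[:-1]:
        left = x <= t
        lv, rv = r[left].mean(), r[~left].mean()
        sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    _, t, lv, rv = best
    return lambda z: np.where(z <= t, lv, rv)

def gradient_boost(x, y, n_rounds=100, learning_rate=0.1):
    f0 = y.mean()                       # initial constant model
    pred = np.full(len(y), f0)
    stumps = []
    for _ in range(n_rounds):
        residual = y - pred             # what the current ensemble still gets wrong
        stump = fit_stump(x, residual)  # the next weak learner targets those errors
        pred = pred + learning_rate * stump(x)
        stumps.append(stump)
    return lambda z: f0 + learning_rate * sum(s(z) for s in stumps)

x = np.linspace(0.0, 1.0, 50)
y = np.sin(2 * np.pi * x)
model = gradient_boost(x, y)
print("boosted MSE:", np.mean((model(x) - y) ** 2))
print("constant-model MSE:", np.mean((y - y.mean()) ** 2))
```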

Together with the learning rate, XGBoost adds column subsampling (randomly selecting a subset of features) to the gradient tree boosting algorithm, which further reduces overfitting. If the regularization term is set to 0, XGBoost's objective falls back to that of traditional gradient tree boosting. Gradient tree boosting has quite effective implementations, such as XGBoost, which builds many optimization techniques on top of the basic algorithm.
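In the XGBoost Python package, the ideas in this paragraph map onto training parameters. The dictionary below is a configuration sketch with illustrative values (our assumption, not a recommendation):

- `eta` is the shrinkage (learning rate).
- `colsample_bytree` is the fraction of columns sampled for each tree.
- `lambda`, `alpha` and `gamma` are the L2, L1 and minimum-split-loss regularization terms.

The training call is left as a comment because it needs the `xgboost` package and your own data (`X`, `y` are placeholders).

```python
# Illustrative values only; tune them for your own data.
params = {
    "objective": "reg:squarederror",
    "eta": 0.1,               # shrinkage: each new tree's contribution is scaled by 0.1
    "colsample_bytree": 0.8,  # column subsampling: each tree sees 80% of the features
    "max_depth": 4,
    "lambda": 1.0,            # L2 penalty on leaf weights (XGBoost's default value)
    "alpha": 0.0,             # L1 penalty on leaf weights
    "gamma": 0.0,             # minimum loss reduction required to make a split
}

# The same configuration with every regularization term switched off.
unregularized = {**params, "lambda": 0.0, "alpha": 0.0, "gamma": 0.0}

# With the xgboost package installed, training would look roughly like:
#   import xgboost as xgb
#   booster = xgb.train(params, xgb.DMatrix(X, label=y), num_boost_round=200)
print(unregularized)
```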

There is a technique called Gradient Boosted Trees, whose base learner is CART (Classification and Regression Trees). For the mathematical differences between GBM and XGBoost, I first suggest you read Friedman's paper on the Gradient Boosting Machine, which applies it to linear regression models, classifiers and, in particular, decision trees. A benefit of using ensembles of decision trees, such as gradient boosting, is that they can automatically provide estimates of feature importance from a trained predictive model.
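Feature importance in tree ensembles comes from the splits themselves. The rough sketch below assumes squared-error loss and one-split trees, and measures importance in two ways:

- It counts how often each feature is chosen, analogous to XGBoost's `weight` importance type.
- It sums the error reduction achieved by that feature's splits, a stand-in for `total_gain`. XGBoost computes gain from gradient and Hessian sums rather than from this exact quantity.

The data is synthetic, with only feature 0 carrying signal, and the helper names are hypothetical.

```python
import numpy as np

def best_split(X, r):
    # Search every feature/threshold for the least-squares stump on residuals r.
    # Returns (sse_reduction, feature, threshold, left_value, right_value).
    base_sse = ((r - r.mean()) ** 2).sum()
    best = (0.0, None, None, None, None)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= t
            lv, rv = r[left].mean(), r[~left].mean()
            sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if base_sse - sse > best[0]:
                best = (base_sse - sse, j, t, lv, rv)
    return best

def boosting_importance(X, y, n_rounds=30, learning_rate=0.1):
    pred = np.full(len(y), y.mean())
    split_count = np.zeros(X.shape[1])  # analogous to importance_type="weight"
    total_gain = np.zeros(X.shape[1])   # analogous to importance_type="total_gain"
    for _ in range(n_rounds):
        gain, j, t, lv, rv = best_split(X, y - pred)
        if j is None:  # no split reduces the error any further
            break
        split_count[j] += 1
        total_gain[j] += gain
        pred = pred + learning_rate * np.where(X[:, j] <= t, lv, rv)
    return split_count, total_gain

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = 3.0 * X[:, 0] + 0.1 * rng.normal(size=100)  # only feature 0 matters
counts, gains = boosting_importance(X, y)
print("split counts:", counts)
print("normalized gain:", gains / gains.sum())
```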

At each boosting iteration, the regression tree is fit by least squares to the negative gradient of the loss (the pseudo-residuals). AdaBoost (Adaptive Boosting) works on improving the … Generally, XGBoost is faster than gradient boosting, but gradient boosting has a wide range of applications.
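In Friedman's gradient boosting machine, each tree is fit by least squares to the pseudo-residuals, i.e. the negative gradient of the loss evaluated at the current predictions. Only that target changes from one loss to another. A minimal sketch of the targets for three common losses follows (the function name is hypothetical; for absolute loss we return 0 where the gradient is undefined).

```python
import math

def pseudo_residuals(loss: str, y: list[float], f: list[float]) -> list[float]:
    # Negative gradient of the loss w.r.t. the current prediction f_i.
    if loss == "squared":   # L = 0.5 * (y - f)^2  ->  y - f
        return [yi - fi for yi, fi in zip(y, f)]
    if loss == "absolute":  # L = |y - f|  ->  sign(y - f), 0 where y == f
        return [math.copysign(1.0, yi - fi) if yi != fi else 0.0 for yi, fi in zip(y, f)]
    if loss == "logistic":  # log loss, y in {0, 1}, f is the log-odds  ->  y - sigmoid(f)
        return [yi - 1.0 / (1.0 + math.exp(-fi)) for yi, fi in zip(y, f)]
    raise ValueError(f"unknown loss: {loss}")

print(pseudo_residuals("squared", [1.0, 0.0, 3.0], [0.5, 0.5, 2.0]))
print(pseudo_residuals("absolute", [1.0, 0.0, 3.0], [0.5, 0.5, 2.0]))
print(pseudo_residuals("logistic", [1.0, 0.0], [0.0, 0.0]))
```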

However, efficiency and scalability are still unsatisfactory when the data has many features. XGBoost uses advanced regularization (L1 and L2), which improves the model's ability to generalize.
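Here is a sketch of how the two penalties change the optimal weight of a single leaf. G and H are the sums of gradients and Hessians in the leaf, λ is the L2 penalty and α the L1 penalty. The L2 part is the -G/(H + λ) formula from the XGBoost paper. The L1 part soft-thresholds G, which is, to our understanding, how the library applies its `alpha` parameter. With α = 0 the result reduces to the paper's formula. Treat this as an illustration rather than the library's code.

```python
def leaf_weight(G: float, H: float, reg_lambda: float = 1.0, reg_alpha: float = 0.0) -> float:
    # L1 (alpha): a gradient sum with |G| <= alpha gives a weight of exactly 0.
    if abs(G) <= reg_alpha:
        return 0.0
    g = G - reg_alpha if G > 0 else G + reg_alpha
    # L2 (lambda): enlarges the denominator, shrinking every weight toward 0.
    return -g / (H + reg_lambda)

G, H = -4.0, 3.0
print(leaf_weight(G, H, reg_lambda=0.0))                  # no regularization
print(leaf_weight(G, H, reg_lambda=1.0))                  # L2 shrinks the weight
print(leaf_weight(G, H, reg_lambda=1.0, reg_alpha=1.0))   # L1 shrinks it further
print(leaf_weight(G, H, reg_lambda=1.0, reg_alpha=5.0))   # L1 zeroes it out
```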

XGBoost is a more regularized form of gradient boosting. Difference between GBM (Gradient Boosting Machine) and XGBoost (Extreme Gradient Boosting), posted by Naresh Kumar.

XGBoost stands for Extreme Gradient Boosting. Boosting is a method of converting a set of weak learners into a strong learner. XGBoost delivers high performance compared with gradient boosting.

Gradient boosting is also a boosting algorithm, so it also tries to create a strong learner from an ensemble of weak learners. I think the difference between gradient boosting and XGBoost is that XGBoost focuses on computational power by parallelizing tree construction, which one can see in this blog. An AdaBoost classifier built on decision stumps can be configured with `base_estim = DecisionTreeClassifier(max_depth=1, max_features=0.06)` and `ab = AdaBoostClassifier(estimator=base_estim, n_estimators=500, learning_rate=0.5)` (scikit-learn versions before 1.2 name this argument `base_estimator`).

This tutorial will explain boosted trees in a self… See also Friedman's "A Gradient Boosting Machine". Boosting that uses second-order derivatives, as XGBoost does, is also known as Newton boosting.

Boosting algorithms are iterative functional gradient descent algorithms. XGBoost's base algorithm is the Gradient Boosting Decision Tree (GBDT) algorithm, and XGBoost specifically trains gradient-boosted decision trees.
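Written out, the functional-gradient-descent view of boosting takes the standard form below. Here $F_m$ is the ensemble after $m$ rounds, $L$ the loss, $h_m$ the weak learner fitted at round $m$, and $\nu$ the learning rate. The notation is ours, and Friedman's additional line-search step is omitted.

$$
r_{im} = -\left[\frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)}\right]_{F = F_{m-1}}, \qquad
h_m = \arg\min_{h} \sum_{i=1}^{n} \big(r_{im} - h(x_i)\big)^2, \qquad
F_m(x) = F_{m-1}(x) + \nu\, h_m(x)
$$

Each round is a gradient descent step, but it is taken in function space: the weak learner $h_m$ approximates the negative gradient $r_{im}$, and $\nu$ plays the role of the step size.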

They work well for a class of problems, but they do … Gradient boosted trees have been around for a while, and there is a lot of material on the topic.

The training methods used by the two algorithms are different. What are the fundamental differences between XGBoost and the gradient boosting classifier from scikit-learn? If you are interested in learning the differences between AdaBoost and gradient boosting, I have posted a link at the bottom of this article.

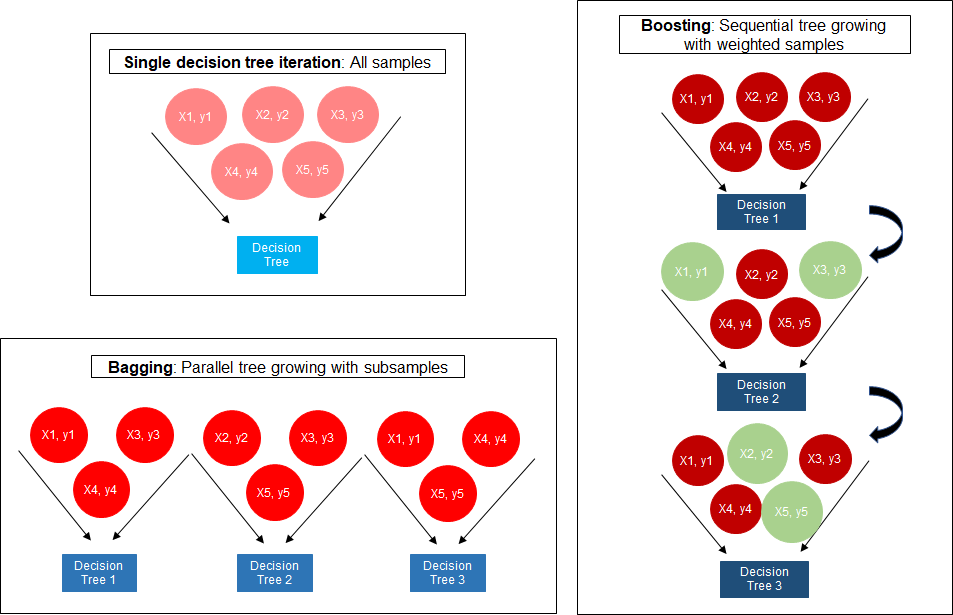

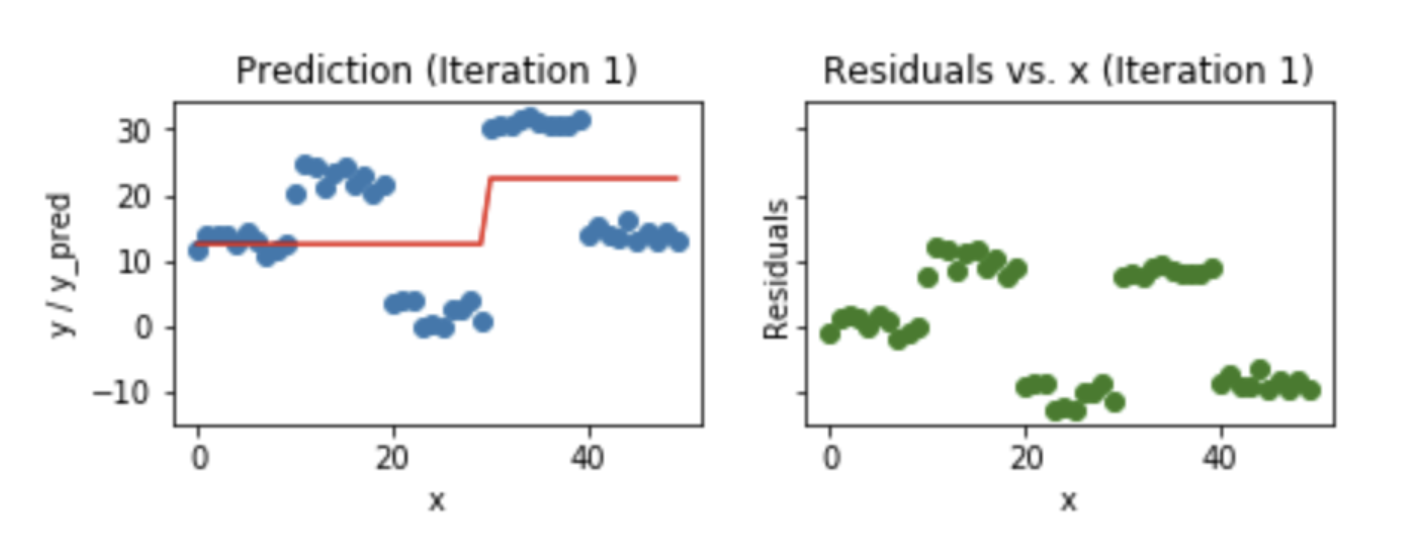

After reading this post you … Gradient boosting is a technique for building an ensemble of weak models such that the predictions of the ensemble minimize a loss function. Introduction to Boosted Trees.

The term "Gradient Boosting" in XGBoost's name originates from Friedman's paper "Greedy Function Approximation: A Gradient Boosting Machine". What is the difference between gradient boosting and XGBoost?

XGBoost's training is very fast and can be parallelized or distributed across clusters. I think the Wikipedia article on gradient boosting explains the connection to gradient descent really well.

Gradient Boosting Decision Tree (GBDT) is a popular machine learning algorithm. It is very popular and in demand, and is often referred to as the winning algorithm in competitions on various platforms. GBM uses the first-order derivative of the loss function at the current boosting iteration, while XGBoost uses both the first- and second-order derivatives.
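The difference between first- and second-order information shows up in the value placed in a leaf. The minimal sketch below uses logistic loss and our own simplifying assumptions. The first-order value is the mean negative gradient in the leaf. The second-order (XGBoost-style) value is the Newton step -G/(H + λ). Friedman's algorithm, and scikit-learn's implementation, also refine leaf values for log loss with a Newton-style step, so treat this purely as an illustration of the two kinds of step.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def leaf_values(y, margin, reg_lambda=1.0):
    # Per-example gradient and Hessian of log loss w.r.t. the margin (log-odds).
    grads = [sigmoid(m) - t for t, m in zip(y, margin)]
    hess = [sigmoid(m) * (1.0 - sigmoid(m)) for m in margin]
    first_order = -sum(grads) / len(grads)                 # mean negative gradient
    second_order = -sum(grads) / (sum(hess) + reg_lambda)  # Newton step
    return first_order, second_order

# Four examples in one leaf, all currently predicted at margin 0 (p = 0.5).
y = [1.0, 1.0, 1.0, 0.0]
print(leaf_values(y, [0.0] * 4, reg_lambda=0.0))
```

For this leaf the exact optimum is a margin of ln 3 ≈ 1.10 (so that p = 0.75). The Newton step lands at 1.0 in a single move, while the first-order value of 0.25 needs a step size or line search to get further.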

XGBoost computes second-order gradients, i.e. second partial derivatives of the loss function.

The R package gbm uses gradient boosting by default.

Originally published by Rohith Gandhi on May 5th, 2018. The different types of boosting algorithms include AdaBoost, Gradient Boosting and XGBoost.

XGBoost models dominate many Kaggle competitions. XGBoost is an implementation of gradient-boosted decision trees. Weights play an important role in …

I have several questions below. In this post you will discover how you can estimate the importance of features for a predictive modeling problem using the XGBoost library in Python.

XGBoost Versus Random Forest: This Article Explores the Superiority, by Aman Gupta (Geek Culture, Medium)

XGBoost vs LightGBM: How Are They Different (neptune.ai)

The Ultimate Guide to AdaBoost, Random Forests and XGBoost, by Julia Nikulski (Towards Data Science)

Boosting Algorithm: AdaBoost and XGBoost

Gradient Boosting and XGBoost (HackerNoon)

Gradient Boosting and XGBoost, originally by Gabriel Tseng (Medium)

Comparison Between AdaBoosting Versus Gradient Boosting (Statistics for Machine Learning)
